I think there is general agreement that the age of ASP.NET wire-framing, post-back web development is over. If you are going to write web applications in 2015 on the .NET stack, you have to be able to use JavaScript and associated JavaScript frameworks like Angular. Similarly, the full-stack developer needs a much deeper understanding of the data that is passing in and out of their application. With the rise of analytics in applications, the developer needs different tools and approaches. Just as you need to know JavaScript if you are going to be in the browser, you need to know F# if you are going to be building industrial-grade domain and data layers.

I decided to refactor an existing ASP.NET postback website to see how hard it would be to introduce F# to the project and apply some basic statistics to make the site smarter. It was pretty easy and the payoffs were quite large.

If you are not familiar with it, Nerd Dinner is the canonical example of an MVC application, created to show Microsoft web devs how to build a website using the .NET stack. The original project was put into a book by the Mount Rushmore of MSFT uber-devs.

The project was so successful that it was actually launched as a real website, and you can find the code on CodePlex.

When you download the source code from the repository, you will notice a couple of things:

1) It is not a very big project: only 1,100 lines of code

2) There are 191 FxCop violations

3) It compiles straight out of source control, but some of the unit tests fail

4) There is pretty low code coverage (21%)

Focusing on the code coverage issue, it makes sense that coverage is low, because there is not much code that can be covered. There are maybe 15 lines of “business logic”, if the term is stretched to include input validation. Here is an example:

Also, there are maybe ten lines of code that do some basic filtering:

So step one in the quest to make Nerd Dinner a bit smarter was to rename the projects. Since MVC is a UI framework, it made sense to name that project UI. I then changed the namespaces to reflect the new structure:

The next step was to take the domain classes out of the UI and put them into the application. First, I created another project:

I then took all of the interfaces that were in the UI and placed them into the application:

```fsharp
namespace NerdDinner.Models

open System
open System.Linq
open System.Linq.Expressions

// Generic repository contract shared by all entities.
type IRepository<'T> =
    abstract All : IQueryable<'T>
    abstract AllIncluding :
        [<ParamArray>] includeProperties : Expression<Func<'T, obj>>[] -> IQueryable<'T>
    abstract member Find : int -> 'T
    abstract member InsertOrUpdate : 'T -> unit
    abstract member Delete : int -> unit
    abstract member SubmitChanges : unit -> unit

// Dinner-specific queries layered on top of the generic repository.
type IDinnerRepository =
    inherit IRepository<Dinner>
    abstract member FindByLocation : float * float -> IQueryable<Dinner>
    abstract FindUpcomingDinners : unit -> IQueryable<Dinner>
    abstract FindDinnersByText : string -> IQueryable<Dinner>
    // Removes a single RSVP (typed as RSVP rather than a free type parameter).
    abstract member DeleteRsvp : RSVP -> unit
```
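One payoff of pulling the interfaces out is testability, which ties back to the low coverage numbers. As a minimal sketch (not code from the project), here is an in-memory fake built with an F# object expression. It assumes a trimmed-down `IRepository<'T>` with `AllIncluding` dropped and a hypothetical `Entity` record standing in for `Dinner`.

```fsharp
open System.Linq
open System.Collections.Generic

// Hypothetical, trimmed-down copy of IRepository<'T> (AllIncluding omitted).
type IRepository<'T> =
    abstract All : IQueryable<'T>
    abstract Find : int -> 'T
    abstract InsertOrUpdate : 'T -> unit
    abstract Delete : int -> unit
    abstract SubmitChanges : unit -> unit

// Hypothetical entity used only for this illustration.
type Entity = { Id : int; Name : string }

// Builds a dictionary-backed fake repository with an object expression,
// so unit tests can run without touching a database.
let inMemoryRepository () : IRepository<Entity> =
    let store = Dictionary<int, Entity>()
    { new IRepository<Entity> with
        member this.All = store.Values.AsQueryable()
        member this.Find (id : int) = store.[id]
        member this.InsertOrUpdate (item : Entity) = store.[item.Id] <- item
        member this.Delete (id : int) = store.Remove(id) |> ignore
        member this.SubmitChanges () = () }
```

The object expression avoids declaring a named class just for tests; a real implementation would sit on top of Entity Framework instead.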

I then took all of the data structures/models and placed them in the application:

```fsharp
namespace NerdDinner.Models

open System
open System.Web.Mvc
open System.Collections.Generic
open System.ComponentModel.DataAnnotations
open System.ComponentModel.DataAnnotations.Schema

// Map details for a dinner; constructor arguments are annotated so their types are fixed.
type public LocationDetail (latitude : float, longitude : float, title : string, address : string) =
    let mutable latitude = latitude
    let mutable longitude = longitude
    let mutable title = title
    let mutable address = address

    member public this.Latitude
        with get() = latitude
        and set(value) = latitude <- value

    member public this.Longitude
        with get() = longitude
        and set(value) = longitude <- value

    member public this.Title
        with get() = title
        and set(value) = title <- value

    member public this.Address
        with get() = address
        and set(value) = address <- value

type public RSVP () =
    let mutable rsvpID = 0
    let mutable dinnerID = 0
    let mutable attendeeName = ""
    let mutable attendeeNameId = ""
    // F# class types do not accept a null literal by default, hence Unchecked.defaultof.
    let mutable dinner = Unchecked.defaultof<Dinner>

    member public self.RsvpID
        with get() = rsvpID
        and set(value) = rsvpID <- value

    member public self.DinnerID
        with get() = dinnerID
        and set(value) = dinnerID <- value

    member public self.AttendeeName
        with get() = attendeeName
        and set(value) = attendeeName <- value

    member public self.AttendeeNameId
        with get() = attendeeNameId
        and set(value) = attendeeNameId <- value

    member public self.Dinner
        with get() = dinner
        and set(value) = dinner <- value

// "and" makes RSVP and Dinner mutually recursive, since each refers to the other.
and public Dinner () =
    let mutable dinnerID = 0
    let mutable title = ""
    let mutable eventDate = DateTime.MinValue
    let mutable description = ""
    let mutable hostedBy = ""
    let mutable contactPhone = ""
    let mutable address = ""
    let mutable country = ""
    let mutable latitude = 0.
    let mutable longitude = 0.
    let mutable hostedById = ""
    let mutable rsvps = List<RSVP>() :> ICollection<RSVP>

    [<HiddenInput(DisplayValue=false)>]
    member public self.DinnerID
        with get() = dinnerID
        and set(value) = dinnerID <- value

    [<Required(ErrorMessage="Title Is Required")>]
    [<StringLength(50,ErrorMessage="Title may not be longer than 50 characters")>]
    member public self.Title
        with get() = title
        and set(value) = title <- value

    [<Required(ErrorMessage="EventDate Is Required")>]
    [<Display(Name="Event Date")>]
    member public self.EventDate
        with get() = eventDate
        and set(value) = eventDate <- value

    [<Required(ErrorMessage="Description Is Required")>]
    [<StringLength(256,ErrorMessage="Description may not be longer than 256 characters")>]
    [<DataType(DataType.MultilineText)>]
    member public self.Description
        with get() = description
        and set(value) = description <- value

    [<StringLength(256,ErrorMessage="Hosted By may not be longer than 256 characters")>]
    [<Display(Name="Hosted By")>]
    member public self.HostedBy
        with get() = hostedBy
        and set(value) = hostedBy <- value

    [<Required(ErrorMessage="Contact Phone Is Required")>]
    [<StringLength(20,ErrorMessage="Contact Phone may not be longer than 20 characters")>]
    [<Display(Name="Contact Phone")>]
    member public self.ContactPhone
        with get() = contactPhone
        and set(value) = contactPhone <- value

    // The length limit now matches the 50 characters promised in the error message.
    [<Required(ErrorMessage="Address Is Required")>]
    [<StringLength(50,ErrorMessage="Address may not be longer than 50 characters")>]
    [<Display(Name="Address")>]
    member public self.Address
        with get() = address
        and set(value) = address <- value

    [<UIHint("CountryDropDown")>]
    member public self.Country
        with get() = country
        and set(value) = country <- value

    [<HiddenInput(DisplayValue=false)>]
    member public self.Latitude
        with get() = latitude
        and set(value) = latitude <- value

    [<HiddenInput(DisplayValue=false)>]
    member public self.Longitude
        with get() = longitude
        and set(value) = longitude <- value

    [<HiddenInput(DisplayValue=false)>]
    member public self.HostedById
        with get() = hostedById
        and set(value) = hostedById <- value

    member public self.RSVPs
        with get() = rsvps
        and set(value) = rsvps <- value

    member public self.IsHostedBy (userName : string) =
        String.Equals(hostedBy, userName, StringComparison.Ordinal)

    member public self.IsUserRegistered (userName : string) =
        rsvps |> Seq.exists (fun r -> r.AttendeeName = userName)

    [<UIHint("Location Detail")>]
    [<NotMapped()>]
    member public self.Location
        with get() = new LocationDetail(self.Latitude, self.Longitude, self.Title, self.Address)
        and set(value : LocationDetail) =
            // Copy the location back onto this dinner's own fields.
            latitude <- value.Latitude
            longitude <- value.Longitude
            title <- value.Title
            address <- value.Address
```

Unlike C#, where the convention is one class per file, all of the related types are placed in the same file. Also notice the absence of semicolons, curly braces, and other distracting characters. Finally, because we are still in the .NET Framework, all of the data annotations are the same. Sure enough, after pointing the MVC UI at the application and hitting Run, the application just works.
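The explicit backing fields above mirror the C# originals one for one. A more compact option is F# auto-properties (`member val ... with get, set`, available since F# 3.0), which generate the backing field for you; attributes such as the data annotations can be placed on them too. A minimal sketch using a hypothetical `RsvpCompact` class that is not part of the project:

```fsharp
// Hypothetical compact variant of the RSVP class, using auto-properties
// instead of explicit "let mutable" fields plus get/set pairs.
type RsvpCompact () =
    member val RsvpID = 0 with get, set
    member val DinnerID = 0 with get, set
    member val AttendeeName = "" with get, set
    member val AttendeeNameId = "" with get, set
```

Settable properties can also be initialized at construction time, e.g. `RsvpCompact(AttendeeName = "Emma")`.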

With the separation complete, it was time to make our app much smarter. The first thing that I thought of was that when a person creates an account, they enter their first and last name:

This seems like an excellent opportunity to add some personalization to our site. Going back to this analysis of names given to newborns in the United States, if I know your first name, I have a pretty good chance of guessing your age, gender, and state of birth. For example, ‘Jose’ is probably a male in his twenties, born in either Texas or California. ‘James’ is probably a male in his 40s or 50s.

I added six pictures to the site, one each for young, middle-aged, and old males and females.

I then modified the logonStatus partial view like so:

```cshtml
@using NerdDinner.UI;

@if(Request.IsAuthenticated) {
    <text>Welcome <b>@(((NerdIdentity)HttpContext.Current.User.Identity).FriendlyName)</b>!
    [ @Html.ActionLink("Log Off", "LogOff", "Account") ]</text>
}
else {
    @:[ @Html.ActionLink("Log On", "LogOn", new { controller = "Account", returnUrl = HttpContext.Current.Request.RawUrl }) ]
}

@if (Session["adUri"] != null)
{
    <img alt="product placement" title="product placement" src="@Session["adUri"]" height="40" />
}
```

Then, in the LogOn action of the Account controller, I set a session variable called adUri that the picture references:

```csharp
public ActionResult LogOn(LogOnModel model, string returnUrl)
{
    if (ModelState.IsValid)
    {
        if (ValidateLogOn(model.UserName, model.Password))
        {
            // Make sure we have the username with the right capitalization
            // since we do case sensitive checks for OpenID Claimed Identifiers later.
            string userName = MembershipService.GetCanonicalUsername(model.UserName);

            FormsAuth.SignIn(userName, model.RememberMe);

            // Pick an ad category for this user and store the matching image path in session.
            AdProvider adProvider = new AdProvider();
            String category = adProvider.GetCatagory(userName);
            Session["adUri"] = "/Content/images/" + category + ".png";

            // ... remainder of the action omitted
```

And finally, I added an implementation of AdProvider back in the application:

```fsharp
type AdProvider () =
    // Placeholder: every user gets the same category for now.
    member this.GetCatagory (personName : string) : string =
        "middleAgedMale"
```

Running the app, we now get a product placement for a middle-aged male.

So the last thing to do is to turn names into those categories. I considered a few different implementations: loading the entire census data set and searching it on demand, or using Azure ML and making an API request each time. In the end, I decided to just create a lookup table that can be searched. In any event, since I am using an interface, swapping out implementations is easy, and since I am using F#, creating implementations is easy.
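The `AdProvider` shown above is a concrete class, so here is a minimal sketch of what an interface seam for swapping implementations might look like. `IAdProvider`, `StubAdProvider`, and `LookupAdProvider` are hypothetical names, and the lookup table is an in-memory `Map` rather than the CSV file used later.

```fsharp
// Hypothetical interface that the MVC controller could depend on.
type IAdProvider =
    abstract GetCategory : string -> string

// Stub implementation, equivalent to the hard-coded first version.
type StubAdProvider () =
    interface IAdProvider with
        member this.GetCategory _ = "middleAgedMale"

// Lookup-table implementation backed by an in-memory name -> category map;
// names not in the table fall back to the supplied default.
type LookupAdProvider (table : Map<string, string>, fallback : string) =
    interface IAdProvider with
        member this.GetCategory name =
            match Map.tryFind name table with
            | Some category -> category
            | None -> fallback
```

The controller would only ever see `IAdProvider`, so replacing the stub with a lookup table (or an Azure ML call) would not touch the UI code.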

I went back to my script file that analyzed the baby names from the US census and created a new script. I loaded the names into memory like before:

```fsharp
#r "C:/Git/NerdChickenChicken/04_mvc3_Working/packages/FSharp.Data.2.0.14/lib/net40/FSharp.Data.dll"

open FSharp.Data

// The sample state file apparently has no header row, so the provider names the columns
// after its first data row (AK,F,1910,Mary,14): F = sex, 1910 = year, Mary = name, 14 = count.
type censusDataContext = CsvProvider<"https://portalvhdspgzl51prtcpfj.blob.core.windows.net/censuschicken/AK.TXT">
type stateCodeContext = CsvProvider<"https://portalvhdspgzl51prtcpfj.blob.core.windows.net/censuschicken/states.csv">

let stateCodes = stateCodeContext.Load("https://portalvhdspgzl51prtcpfj.blob.core.windows.net/censuschicken/states.csv")

// Loads one state's file, e.g. .../censuschicken/TX.TXT
let fetchStateData (stateCode : string) =
    let uri = System.String.Format("https://portalvhdspgzl51prtcpfj.blob.core.windows.net/censuschicken/{0}.TXT", stateCode)
    censusDataContext.Load(uri)

// Every row from every state, materialized once into an array.
let usaData =
    stateCodes.Rows
    |> Seq.collect (fun r -> fetchStateData(r.Abbreviation).Rows)
    |> Seq.toArray
```

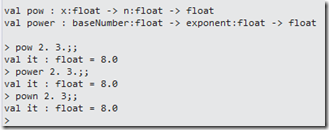

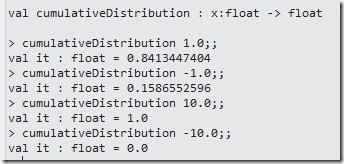

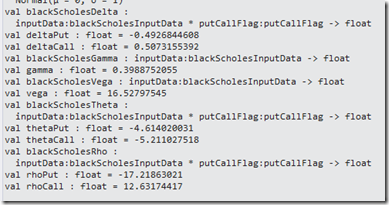

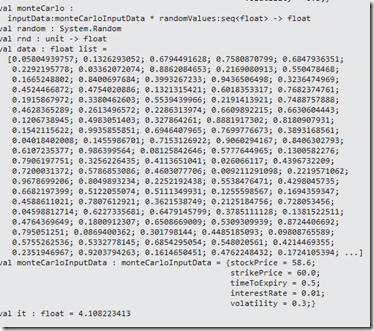
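The odd property names used below (`r.F`, `r.``1910```, `r.Mary`, `r.``14```) come from the type provider reading the first data row as headers. To make the layout explicit, here is a minimal hand-rolled parse, assuming each line is `state,sex,year,name,count` as those names suggest. The `NameRecord` type and `parseLine` function are hypothetical and not part of the script.

```fsharp
// Hypothetical record for one line of a state file,
// assuming the layout state,sex,year,name,count.
type NameRecord =
    { State : string
      Sex : string
      Year : int
      Name : string
      Count : int }

// Parses one comma-separated line; returns None for malformed input.
let parseLine (line : string) =
    match line.Split(',') with
    | [| state; sex; year; name; count |] ->
        match System.Int32.TryParse(year), System.Int32.TryParse(count) with
        | (true, y), (true, c) ->
            Some { State = state; Sex = sex; Year = y; Name = name; Count = c }
        | _ -> None
    | _ -> None
```

For example, `parseLine "AK,F,1910,Mary,14"` yields a record with `Sex = "F"`, `Year = 1910`, `Name = "Mary"`, and `Count = 14`.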

I then created a function that returns the probability that a name belongs to a male:

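```fsharp
// Probability that a name is male: male births with that name / all births with that name.
let genderSearch name =
    let nameFilter =
        usaData
        |> Seq.filter (fun r -> r.Mary = name)                  // Mary = name column
        |> Seq.groupBy (fun r -> r.F)                           // F = sex column ("M"/"F")
        |> Seq.map (fun (sex, rows) -> sex, rows |> Seq.sumBy (fun r -> r.``14``)) // 14 = count
        |> Seq.toArray

    let nameSum = nameFilter |> Seq.sumBy (fun (_, c) -> c)
    // Names never recorded as male have no "M" group; return 0.0 instead of throwing.
    match nameFilter |> Seq.tryFind (fun (g, _) -> g = "M") with
    | Some (_, c) -> float c / float nameSum
    | None -> 0.0

genderSearch "James"
```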
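The calculation itself is just a ratio of counts. Here is a self-contained sketch of the same idea over a small hypothetical list of `(sex, name, count)` tuples standing in for `usaData`; `sampleRows` and `maleProbability` are illustrative names only.

```fsharp
// Hypothetical (sex, name, count) rows standing in for usaData.
let sampleRows =
    [ ("M", "James", 900)
      ("F", "James", 100)
      ("F", "Emma", 500) ]

// Fraction of all recorded births with this name that were male;
// returns 0.0 when the name never appears as male (or not at all).
let maleProbability rows name =
    let counts = rows |> List.filter (fun (_, n, _) -> n = name)
    let total = counts |> List.sumBy (fun (_, _, c) -> c)
    let male = counts |> List.sumBy (fun (s, _, c) -> if s = "M" then c else 0)
    if total = 0 then 0.0 else float male / float total
```

With this data, "James" comes out at 900 / 1000 = 0.9 and "Emma" at 0.0.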

I then created a function that calculated the last year the name was popular (a year counts as popular when its count is more than one standard deviation above the mean):

```fsharp
// Births per year for a name: (year, count, share of all births with that name).
let ageSearch name =
    let nameFilter =
        usaData
        |> Seq.filter (fun r -> r.Mary = name)
        |> Seq.groupBy (fun r -> r.``1910``)                    // 1910 = year column
        |> Seq.map (fun (year, rows) -> year, rows |> Seq.sumBy (fun r -> r.``14``))
        |> Seq.toArray
    let nameSum = nameFilter |> Seq.sumBy (fun (_, c) -> c)
    nameFilter
    |> Seq.map (fun (y, c) -> y, c, float c / float nameSum)
    |> Seq.toArray

// Population variance and standard deviation.
let variance (source : float seq) =
    let mean = Seq.average source
    let deltas = Seq.map (fun x -> pown (x - mean) 2) source
    Seq.average deltas

let standardDeviation (values : float seq) =
    sqrt (variance values)

let standardDeviation' name =
    ageSearch name
    |> Seq.map (fun (_, c, _) -> float c)
    |> standardDeviation

let average name =
    ageSearch name
    |> Seq.map (fun (_, c, _) -> float c)
    |> Seq.average

// Threshold: one standard deviation above the mean yearly count.
let attachmentPoint name = (average name) + (standardDeviation' name)

// Years above the threshold, sorted by year.
let popularYears name =
    let allYears = ageSearch name
    let attachmentPoint' = attachmentPoint name
    allYears
    |> Seq.filter (fun (_, c, _) -> float c > attachmentPoint')
    |> Seq.sortBy (fun (y, _, _) -> y)

// If no year clears the threshold (e.g. a name recorded in a single year, where the
// standard deviation is 0), fall back to the peak year instead of throwing.
let lastPopularYear name =
    match popularYears name |> Seq.toList with
    | [] -> ageSearch name |> Seq.maxBy (fun (_, c, _) -> c)
    | years -> List.last years

let firstPopularYear name =
    match popularYears name |> Seq.toList with
    | [] -> ageSearch name |> Seq.maxBy (fun (_, c, _) -> c)
    | years -> List.head years

lastPopularYear "James"
```
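The "attachment point" is the mean of the per-year counts plus one population standard deviation, and a year is popular when its count exceeds it. Here is a self-contained sketch of that rule over a hypothetical list of `(year, count)` pairs; `stdDev` and `popularYearsOf` are illustrative names, not part of the script.

```fsharp
// Population standard deviation, matching the variance function in the script.
let stdDev (xs : float list) =
    let mean = List.average xs
    xs |> List.averageBy (fun x -> (x - mean) ** 2.0) |> sqrt

// Years whose count is above mean + 1 standard deviation, sorted by year.
let popularYearsOf (yearCounts : (int * int) list) =
    let counts = yearCounts |> List.map (snd >> float)
    let threshold = List.average counts + stdDev counts
    yearCounts
    |> List.filter (fun (_, c) -> float c > threshold)
    |> List.map fst
    |> List.sort
```

For counts of 10, 10, 10, and 90 the mean is 30 and the standard deviation is about 34.6, so only the year with 90 clears the threshold of roughly 64.6. When every year has the same count, the standard deviation is 0 and no year is strictly above the threshold, so the result is empty.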

I then created a function that takes the probability of being male and the last year the name was popular, and assigns the name to a category:

```fsharp
let nameAssignment (malePercent, lastYearPopular) =
    // Over 75% male counts as male, under 75% as female;
    // last popular before 1945 is "old", after 1980 is "young".
    match malePercent > 0.75, malePercent < 0.75, lastYearPopular < 1945, lastYearPopular > 1980 with
    | true, false, true, false -> "oldMale"
    | true, false, false, false -> "middleAgedMale"
    | true, false, false, true -> "youngMale"
    | false, true, true, false -> "oldFemale"
    | false, true, false, false -> "middleAgedFemale"
    | false, true, false, true -> "youngFemale"
    | _, _, _, _ -> "unknown"
```
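The four-way boolean match can also be split into two independent decisions, one for gender and one for age band. Here is a sketch with the same thresholds (0.75, 1945, 1980); `categorize` is a hypothetical name, and it produces `youngFemale` for the young-female case.

```fsharp
// Hypothetical restatement of nameAssignment: decide gender and age band
// separately, then combine them into the image/category name.
let categorize (malePercent : float, lastYearPopular : int) =
    let gender =
        if malePercent > 0.75 then Some "Male"
        elif malePercent < 0.75 then Some "Female"
        else None // exactly 0.75 is treated as unknown, as in the tuple match
    let age =
        if lastYearPopular < 1945 then "old"
        elif lastYearPopular > 1980 then "young"
        else "middleAged"
    match gender with
    | Some g -> age + g
    | None -> "unknown"
```

Keeping the two axes separate makes it easier to change one threshold without touching the other.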

And then it was a matter of tying the functions together for each of the names in the master list:

```fsharp
// Every distinct first name in the data set.
let nameList =
    usaData
    |> Seq.map (fun r -> r.Mary)
    |> Seq.distinct

// (name, category) for every name.
let nameList' =
    nameList
    |> Seq.map (fun n -> n, genderSearch n)
    |> Seq.map (fun (n, mp) -> n, mp, lastPopularYear n)
    |> Seq.map (fun (n, mp, (y, _, _)) -> n, mp, y)
    |> Seq.map (fun (n, mp, y) -> n, nameAssignment (mp, y))
```
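As written, each call to `genderSearch` filters all of `usaData` again, and `lastPopularYear` runs `ageSearch` three times (directly and through `average` and `standardDeviation'`), so the batch does work proportional to names × rows. One possible way to cut that down, sketched here over hypothetical `(name, sex, year, count)` tuples rather than the provider's row type, is to group the rows by name once and compute from each name's own group.

```fsharp
// Hypothetical flattened rows: (name, sex, year, count).
let rows =
    [ ("James", "M", 1950, 900)
      ("James", "F", 1950, 10)
      ("Emma", "F", 2005, 700) ]

// Group once by name; each entry holds only that name's (sex, year, count) rows.
let byName : Map<string, (string * int * int) list> =
    rows
    |> List.groupBy (fun (name, _, _, _) -> name)
    |> List.map (fun (name, rs) -> (name, rs |> List.map (fun (_, s, y, c) -> (s, y, c))))
    |> Map.ofList

// Male fraction computed from the pre-grouped rows only.
let maleFraction name =
    match Map.tryFind name byName with
    | None -> 0.0
    | Some rs ->
        let total = rs |> List.sumBy (fun (_, _, c) -> c)
        let male = rs |> List.sumBy (fun (s, _, c) -> if s = "M" then c else 0)
        float male / float total
```

The same grouped map could feed the per-year popularity calculation, so each name's rows are scanned a handful of times instead of the whole data set.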

And then I wrote the list out to a file:

```fsharp
open System.IO

let outFile = new StreamWriter(@"c:\data\nameList.csv")

// One "name,category" line per name (no header row is written).
nameList' |> Seq.iter (fun (n, c) -> outFile.WriteLine(sprintf "%s,%s" n c))
outFile.Flush()
outFile.Close()
```

Thanks to this Stack Overflow post for the file write (I wish the CSV type provider had this ability). With the file created, I can use it as a lookup for the name function back in the MVC app, again through the CSV type provider:

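```fsharp
open FSharp.Data

// nameList.csv has no header row, so the provider takes its first line (Annie,oldFemale)
// as the column names: r.Annie holds the name and r.oldFemale the category.
type nameMappingContext = CsvProvider<"C:/data/nameList.csv">

type AdProvider () =
    member this.GetCatagory (personName : string) : string =
        let nameList = nameMappingContext.Load("C:/data/nameList.csv")
        let foundName =
            nameList.Rows
            |> Seq.filter (fun r -> r.Annie = personName)
            |> Seq.map (fun r -> r.oldFemale)
            |> Seq.toArray
        // Names not in the table fall back to the original default.
        if foundName.Length > 0 then
            foundName.[0]
        else
            "middleAgedMale"
```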

And now I have some (basic) personalization in Nerd Dinner. (Emma is a young female name, so she gets a picture of a campground.)

This is rather crude. There is no provision for nicknames, case sensitivity, etc. But the site is on its way to becoming smarter…
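Case sensitivity is one of the easier gaps to close. A minimal sketch, assuming the `(name, category)` pairs are loaded into memory once instead of re-reading the CSV on every `GetCatagory` call: build a `Dictionary` with `StringComparer.OrdinalIgnoreCase`. `buildLookup` and `categoryFor` are hypothetical helpers, not part of the project.

```fsharp
open System
open System.Collections.Generic

// Builds a case-insensitive name -> category table once.
let buildLookup (pairs : seq<string * string>) =
    let table = Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
    for (name, category) in pairs do
        table.[name.Trim()] <- category
    table

// Looks up a name ignoring case and surrounding whitespace,
// falling back to the same default the post uses.
let categoryFor (table : Dictionary<string, string>) (name : string) =
    match table.TryGetValue(name.Trim()) with
    | true, category -> category
    | _ -> "middleAgedMale"
```

Nicknames would need a separate mapping (for example "Jim" to "James") applied before the lookup.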

The code can be found on GitHub.