Consuming Azure ML With F#

September 16, 2014

(This post is a continuation of this one)

So with a model that works well enough, I selected only that model and saved it.

I created a new experiment and used that model with the base data. I then marked the project columns as the input and the score as the output (green and blue circles, respectively).

After running it, I published it as a web service.

And voila, an endpoint ready to go. I then took the auto-generated script and opened up a new Visual Studio F# project to use it. The problem was that this is the data structure that the model needs:

    FeatureVector = new Dictionary<string, string>()
    {
        { "Precinct", "0" }, { "VRN", "0" }, { "VRstatus", "0" },
        { "VRlastname", "0" }, { "VRfirstname", "0" }, { "VRmiddlename", "0" }, { "VRnamesufx", "0" },
        { "VRstreetnum", "0" }, { "VRstreethalfcode", "0" }, { "VRstreetdir", "0" }, { "VRstreetname", "0" },
        { "VRstreettype", "0" }, { "VRstreetsuff", "0" }, { "VRstreetunit", "0" },
        { "VRrescity", "0" }, { "VRstate", "0" }, { "Zip Code", "0" },
        { "VRfullresstreet", "0" }, { "VRrescsz", "0" },
        { "VRmail1", "0" }, { "VRmail2", "0" }, { "VRmail3", "0" }, { "VRmail4", "0" }, { "VRmailcsz", "0" },
        { "Race", "0" }, { "Party", "0" }, { "Gender", "0" }, { "Age", "0" },
        { "VRregdate", "0" }, { "VRmuni", "0" }, { "VRmunidistrict", "0" }, { "VRcongressional", "0" },
        { "VRsuperiorct", "0" }, { "VRjudicialdistrict", "0" }, { "VRncsenate", "0" }, { "VRnchouse", "0" },
        { "VRcountycomm", "0" }, { "VRschooldistrict", "0" },
        { "11/6/2012", "0" }, { "Voted Ind", "0" },
    },
    GlobalParameters = new Dictionary<string, string>() { } };

And since I am only using 6 of the columns, it made sense to reload the Wake County Voter Data with just the needed columns. I went back to the original CSV and did that. Interestingly, I could not set the original dataset as the publish input, so I added a project column module that does nothing.

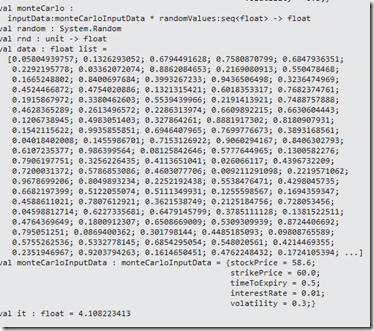

With that in place, I republished the service and opened Visual Studio. I decided to start with a script. I was struggling through the async when Tomas P helped me on Stack Overflow here. I’ll say it again, the F# community is tops. In any event, here is the initial script:

    #r @"C:\Program Files (x86)\Reference Assemblies\Microsoft\Framework\.NETFramework\v4.5\System.Net.Http.dll"
    #r @"..\packages\Microsoft.AspNet.WebApi.Client.5.2.2\lib\net45\System.Net.Http.Formatting.dll"

    open System
    open System.Net.Http
    open System.Net.Http.Headers
    open System.Net.Http.Formatting
    open System.Collections.Generic

    // Request shape expected by the scoring endpoint, modeled as records
    type scoreData = {FeatureVector:Dictionary<string,string>;GlobalParameters:Dictionary<string,string>}
    type scoreRequest = {Id:string; Instance:scoreData}

    let invokeService () = async {
        let apiKey = ""
        let uri = "https://ussouthcentral.services.azureml.net/workspaces/19a2e623b6a944a3a7f07c74b31c3b6d/services/f51945a42efa42a49f563a59561f5014/score"
        use client = new HttpClient()
        client.DefaultRequestHeaders.Authorization <- new AuthenticationHeaderValue("Bearer",apiKey)
        client.BaseAddress <- new Uri(uri)

        // Only the six columns the model uses
        let input = new Dictionary<string,string>()
        input.Add("Zip Code","27519")
        input.Add("Race","W")
        input.Add("Party","UNA")
        input.Add("Gender","M")
        input.Add("Age","45")
        input.Add("Voted Ind","1")

        let instance = {FeatureVector=input; GlobalParameters=new Dictionary<string,string>()}
        let scoreRequest = {Id="score00001";Instance=instance}

        // Post the request as JSON and read the response body as a string
        let! response = client.PostAsJsonAsync("",scoreRequest) |> Async.AwaitTask
        let! result = response.Content.ReadAsStringAsync() |> Async.AwaitTask

        if response.IsSuccessStatusCode then
            printfn "%s" result
        else
            printfn "FAILED: %s" result
        response |> ignore
        }

    invokeService() |> Async.RunSynchronously

Unfortunately, when I run it, it fails. Below is the Fiddler trace:

So it looks like the JSON serializer is appending the “@” symbol to the record field names. I changed the records to classes and voila:

You can see the final script here.
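For readers who do not follow the link, here is a minimal sketch of what swapping the records for classes could look like. It is not necessarily what the final script does: the names `ScoreDataRecord`, `ScoreData`, `ScoreRequest` and `fieldNames` are made up for illustration. The reflection helper only prints the compiler-generated field names of each type, on the assumption that the serializer was picking up the record's backing fields rather than its properties.

```fsharp
open System.Collections.Generic
open System.Reflection

// Record version, shaped like the one in the initial script (renamed for this sketch)
type ScoreDataRecord =
    { FeatureVector: Dictionary<string, string>
      GlobalParameters: Dictionary<string, string> }

// Class version: plain classes that expose the same data as public properties.
// These type names are hypothetical.
type ScoreData(featureVector: Dictionary<string, string>,
               globalParameters: Dictionary<string, string>) =
    member this.FeatureVector = featureVector
    member this.GlobalParameters = globalParameters

type ScoreRequest(id: string, instance: ScoreData) =
    member this.Id = id
    member this.Instance = instance

// List the instance fields the compiler generated for a type
let fieldNames (t: System.Type) =
    t.GetFields(BindingFlags.Instance ||| BindingFlags.Public ||| BindingFlags.NonPublic)
    |> Array.map (fun f -> f.Name)
    |> Array.toList

printfn "record fields: %A" (fieldNames typeof<ScoreDataRecord>)
printfn "class fields:  %A" (fieldNames typeof<ScoreData>)
```

With classes like these, the request would be built as `ScoreRequest("score00001", ScoreData(input, Dictionary<string,string>()))` and posted the same way as before.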

So then I threw in some different numbers:

- A millennial: ["27519","W","D","F","25","1","1","0.62500011920929"]

- A senior citizen: ["27519","W","D","F","75","1","1","0.879632294178009"]

I wonder why social security never gets cut?

In any event, just to check the model:

- A 15 year old: ["27519","W","D","F","15","1","0","0.00147285079583526"]
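The responses above come back as a flat array of strings, so a small helper makes them easier to read. The sketch below assumes the first six values echo the inputs in the order Zip Code, Race, Party, Gender, Age, Voted Ind, and that the last two are the scored label and the scored probability. That column order is my reading of the output, not something the service documents here. `ScoredVoter` and `parseScore` are hypothetical names, and the naive comma split only works because none of these values contain commas; a real JSON parser would be the safer choice.

```fsharp
open System
open System.Globalization

// Hypothetical shape for one scored response (column meaning is an assumption)
type ScoredVoter =
    { ZipCode: string
      Race: string
      Party: string
      Gender: string
      Age: string
      VotedInd: string
      ScoredLabel: string
      ScoredProbability: float }

// Parse a response such as ["27519","W","D","F","25","1","1","0.625..."]
// Returns None when the array does not have exactly eight values
// or the last value is not a number.
let parseScore (json: string) : ScoredVoter option =
    let values =
        json.Trim().TrimStart('[').TrimEnd(']').Split(',')
        |> Array.map (fun s -> s.Trim().Trim('"'))
    match values with
    | [| zip; race; party; gender; age; voted; label; prob |] ->
        match Double.TryParse(prob, NumberStyles.Float, CultureInfo.InvariantCulture) with
        | true, p ->
            Some { ZipCode = zip; Race = race; Party = party; Gender = gender
                   Age = age; VotedInd = voted; ScoredLabel = label
                   ScoredProbability = p }
        | _ -> None
    | _ -> None

// Usage with the millennial response from above
match parseScore """["27519","W","D","F","25","1","1","0.62500011920929"]""" with
| Some v -> printfn "age %s: label %s, probability %.3f" v.Age v.ScoredLabel v.ScoredProbability
| None -> printfn "unexpected response shape"
```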